Europa is scarred.

Its surface is completely covered in fractures. There are a few hypothesized sources of these crevasses, which can tell us more about its subsurface and interior.

Of course, identifying and characterizing thousands of ridges on Europa (at different scales, different viewing angles, different albedos, etc) is quite a difficult task. This is the point where I’d like to mention the projects that inspired mine.

First, the Global geologic map of Europa. (https://www.usgs.gov/maps/global-geologic-map-europa) This is an amazing effort (and the best we have to this day) of classifying basically every single feature on Europa. This map is the reason I did a research project on Cycloids (Nov-Dec 2024), it’s the reason I’m doing this project now, and it also provided the backbone of the next project:

LineaMapper. It answers the question asked by every startup CEO: “Why can’t you use AI for this?” Yes. Yes, we can use ‘AI’ for this, and yes, someone already has. This project does not intend to do the same things LineaMapper does, and I don’t think I would be able to ‘beat’ this other project. If you’re looking for an actual documented, published model to classify fractures on Europa, you can find the paper here.

Now, the current goal of this project.

Europa’s fractures are called lineae (singular: linea). Linea is the Latin word for ‘line’ or ‘stripe’, and the lineae on Europa are called this because they are lines. My intent for this project is to use ‘older’ line and edge detection techniques to classify the lines on Europa.

Here is what I hope to measure:

Here is what I am not doing:

For this project, I intend to use OpenCV for image analysis and processing. The main features I will use are Canny edge detection and the Hough line transform.

Please review the links above for information about these.

Also, for most of this, I will be using

The first step is pre-processing the image. There are a bunch of options for this, which I will be experimenting with.

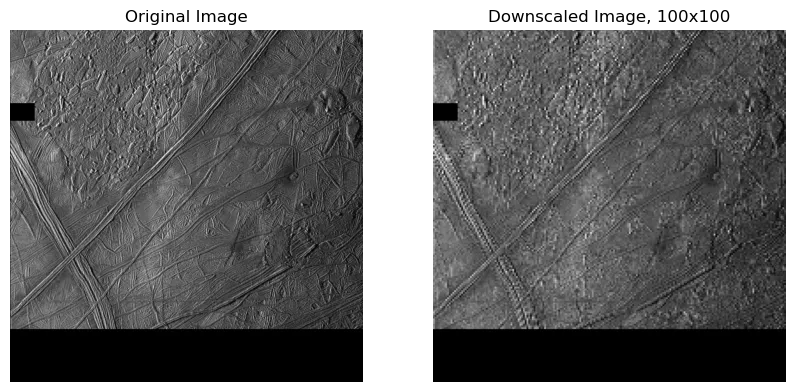

Downscaling does not help image analysis by itself, but bringing all of my images to a constant resolution keeps the values of certain operations consistent between images.

Downscaling also helps with creating an ‘image pyramid’: fractures on Europa appear at ALL SHAPES AND SIZES. This means that my model might be good at finding fractures that take up 1/2 of the image frame, but fail to find fractures that take up only 1/10 of the image frame. The solution to this is to repeat the same processing techniques at different scales/resolutions. More information about this is on the Wikipedia page.

Currently, there are no image pyramids. For now, using a constant resolution (200x200 or 500x500) is enough for me.

(This is an extreme case of downscaling to 100x100, just as an example.) For reference, the original image I’m using has already been downscaled to 500x500.

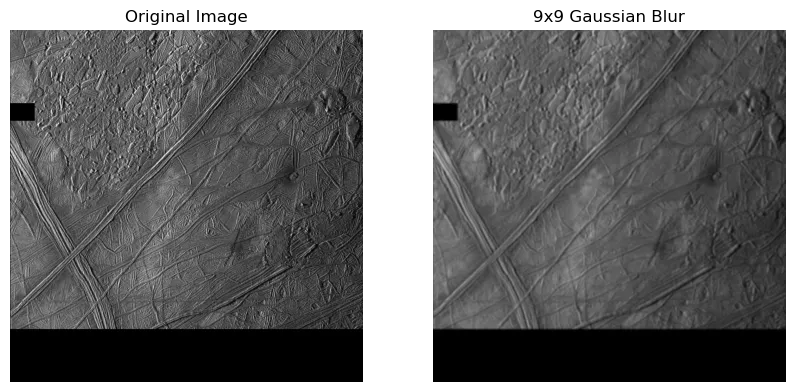

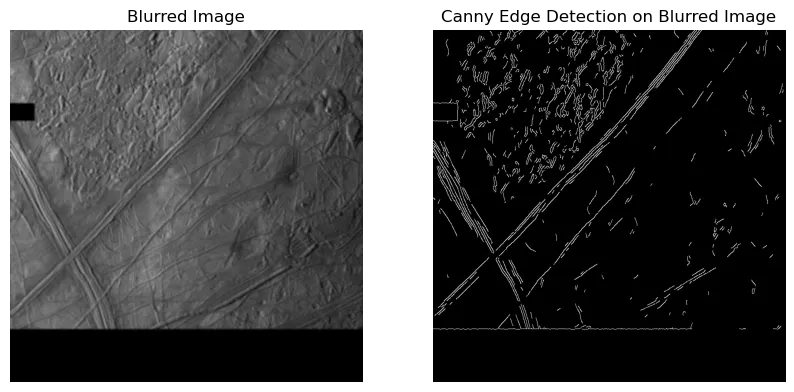

This is a method of blurring an image, where each pixel is replaced by a weighted average of its neighbors, with the weights following a Gaussian distribution (aka bell curve, normal distribution).

This always results in a loss of detail. It’s typically used to erase noise: take a look at the chaos region at the top left of the image below.

(9x9 is an extreme case, but it shows the blurring effect quite well.)

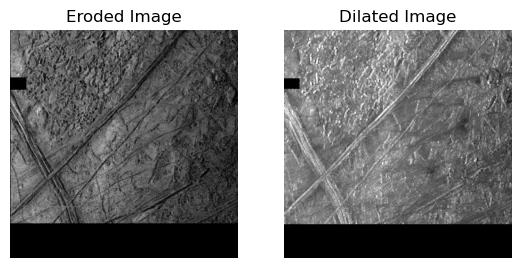

There are more ways to modify the image before processing, but I am not experienced enough to describe them. Here’s a good description of them.

Here’s where most of the actual processing is done. I am not an expert in these topics; I’m just using these tools, so I’ve linked descriptions of them in each section.

https://en.wikipedia.org/wiki/Canny_edge_detector https://docs.opencv.org/4.x/da/d22/tutorial_py_canny.html

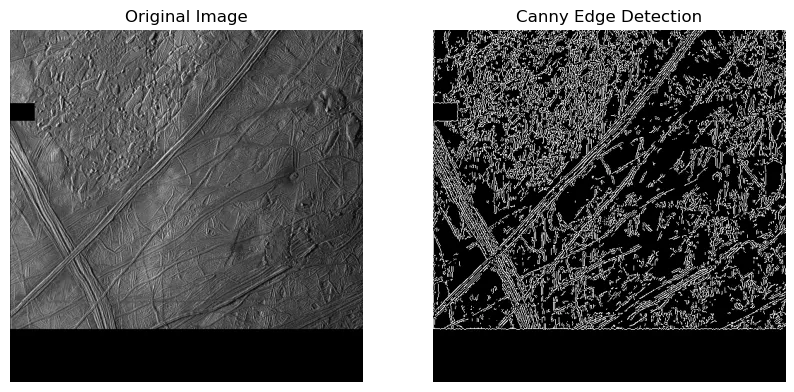

This method extracts edges from an image, using (surprisingly simple) math that is best explained by somebody else.

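There are two parameters that can be adjusted, both used during the hysteresis step. Pixels whose gradient is above the higher threshold are always kept as edges, which limits the result to strong features. Pixels between the two thresholds are kept only if they connect to one of those strong edges, so the lower threshold controls how far an edge can continue through its weaker parts. Anything below the lower threshold is discarded. Again, please check the links for more information.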

In the second example above, I run Canny edge detection on a blurred image. The Canny algorithm, as described in the links above, itself starts with a Gaussian blur, so the result has effectively been blurred twice at this point. It’s a good example of how blurring the image in pre-processing can help isolate the important features.

https://en.wikipedia.org/wiki/Hough_transform#Detecting_lines https://docs.opencv.org/3.4/d9/db0/tutorial_hough_lines.html

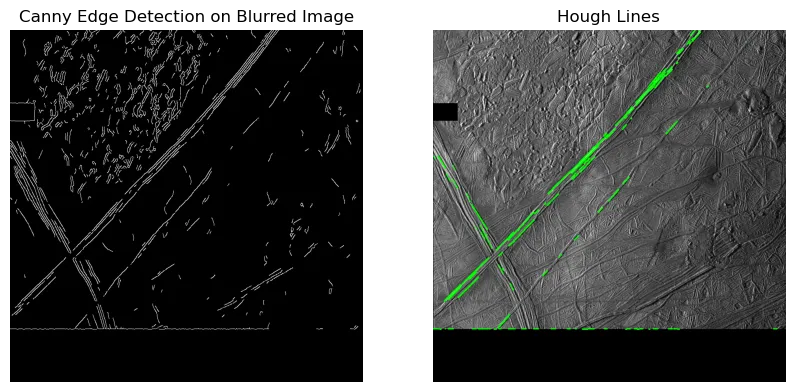

The Hough transform is used here to identify which of the edges are actually lines. It’s more complicated than Canny edge detection, so please review the links above for more information.

There are also two types of Hough transform in OpenCV. I will explore both, but mostly I will be using HoughLinesP, which returns the two endpoints of each line segment (more useful for me), instead of HoughLines, which returns each line as a distance and an angle.

The results are quite similar. What’s the difference?

Each of the lines from the Hough transform is completely straight.

Of course, in this image, it’s currently identifying ~144 lines. That count may be correct, but it currently treats these 2-3 long lines as a bunch of smaller, individual lines.

So, what’s next?

Currently, I will continue to work on tuning the parameters of these processing steps.

If you’d like to see the code used for this, please check the python notebook: Europa_filters.ipynb

There is one issue that I did not mention. Due to the differences in resolution, viewing angle, albedo, etc., it’s likely that parameters used on one image will NOT work on another. I will be looking into techniques such as Otsu’s method, along with perhaps training a model to select these parameters! (Not a CNN, as mentioned in the first section.)